Slurm GPU: Optimising high-performance workloads on Kubernetes

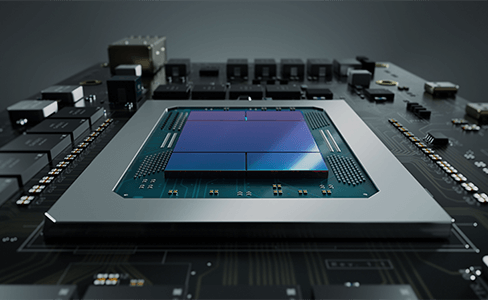

In today’s world of advanced Artificial Intelligence (AI) and Machine Learning (ML), managing large-scale computing workloads efficiently is a critical challenge for enterprises. As businesses deploy massive models such as Large Language Models (LLMs), the need for powerful, well-managed GPU infrastructure has never been greater. Traditional systems like Slurm have long been trusted for High-Performance Computing (HPC) workload management, but the modern AI era demands more flexible, cloud-native approaches.

Tata Communications bridges this gap by combining the proven efficiency of Slurm GPU scheduling with the scalability and agility of Kubernetes. Through its AI Cloud platform, powered by dedicated BareMetal GPUs, Tata Communications delivers the ideal environment for training, deploying, and scaling AI models efficiently and securely.

Driving enterprise AI performance with Slurm GPU scheduling

Efficient workload management lies at the heart of every successful AI deployment. Slurm GPU scheduling ensures that computing resources are utilised effectively, reducing idle time and improving overall performance. In traditional HPC environments, Slurm has been widely used to distribute and manage computing jobs across multiple nodes.
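Here is a minimal sketch of what a Slurm GPU request looks like. The Python helper below renders a batch script that asks Slurm for whole GPUs through its generic resource (GRES) mechanism. The job name, the partition name (`gpu`) and the training command (`python train.py`) are hypothetical placeholders. The exact GRES setup depends on how a given cluster is configured.

```python
def render_sbatch(job_name: str, nodes: int, gpus_per_node: int,
                  hours: int, command: str, partition: str = "gpu") -> str:
    """Render a Slurm batch script that requests whole GPUs on each node."""
    if nodes < 1 or gpus_per_node < 1 or hours < 1:
        raise ValueError("nodes, gpus_per_node and hours must be positive")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",            # hypothetical partition name
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={gpus_per_node}",  # one task per GPU
        f"#SBATCH --gres=gpu:{gpus_per_node}",         # GPUs per node via GRES
        f"#SBATCH --time={hours:02d}:00:00",           # wall-clock limit
        f"srun {command}",                             # launch one copy per task
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: 2 nodes x 8 GPUs for up to 4 hours.
    print(render_sbatch("llm-finetune", nodes=2, gpus_per_node=8,
                        hours=4, command="python train.py"), end="")
```

You could save the output as, say, `job.sh` and submit it with `sbatch job.sh`. Slurm then holds the job until two nodes each have eight free GPUs, instead of starting it on partial resources.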

Tata Communications enhances this concept by integrating Slurm principles into its cloud-native orchestration layer. The platform uses a CNCF-certified Kubernetes distribution to allocate GPU resources dynamically. This allows enterprises to scale experiments efficiently and maintain consistent performance, even for complex inferencing and training tasks.
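On the Kubernetes side, GPUs are usually requested as an extended resource. The sketch below builds a minimal Pod manifest as a Python dictionary. It assumes the NVIDIA device plugin is installed, since that plugin advertises GPUs as `nvidia.com/gpu`. The pod name and container image are hypothetical, and the sketch does not describe Tata Communications' own orchestration layer.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Return a minimal Pod manifest that requests whole NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    "resources": {
                        # Extended resource advertised by the NVIDIA device plugin.
                        # For extended resources, setting limits is enough; the
                        # request defaults to the same value.
                        "limits": {"nvidia.com/gpu": gpus},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image.
    manifest = gpu_pod_manifest("train-job", "registry.example.com/trainer:latest", 4)
    print(json.dumps(manifest, indent=2))
```

You could save the printed JSON to a file and submit it with `kubectl apply -f`. GPUs are requested in whole units, so by default the scheduler only places the pod on a node that has that many unallocated GPUs.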

By combining the intelligence of Slurm GPU scheduling with Kubernetes’ dynamic scaling capabilities, Tata Communications provides an environment that can handle AI workloads of any size, from small-scale research projects to mission-critical enterprise deployments.

Leveraging Slurm for GPU resource management in Kubernetes environments

In modern AI workflows, effective GPU resource management is essential. Slurm Kubernetes integration represents the next evolution of workload orchestration. It brings together the strengths of Slurm’s job scheduling system and Kubernetes’ container orchestration, enabling a seamless balance of resource allocation, scalability, and reliability.

GPU-as-a-Service architecture serves as the foundation for this integration. Because the GPUs run on dedicated BareMetal nodes rather than shared virtual machines, they are available on demand without the resource contention that can occur in virtualised environments. Each GPU node is optimised for maximum throughput, ensuring predictable and consistent performance.
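A toy first-fit allocator can illustrate exclusive, on-demand allocation. This is a simplified model written for illustration, not Slurm's scheduler or the platform's implementation. Each GPU is handed to at most one job, and a job that cannot be satisfied waits instead of sharing a device. Node names and sizes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Node:
    name: str
    total_gpus: int
    free: List[int] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        # Every GPU starts out unallocated.
        self.free = list(range(self.total_gpus))


def allocate(nodes: List[Node], gpus: int) -> Optional[Tuple[str, List[int]]]:
    """First-fit: give the job dedicated GPUs on the first node that has enough.

    Returns (node_name, gpu_ids), or None if the job must wait in the queue.
    """
    for node in nodes:
        if len(node.free) >= gpus:
            granted, node.free = node.free[:gpus], node.free[gpus:]
            return node.name, granted
    return None


def release(node: Node, gpu_ids: List[int]) -> None:
    """Return GPUs to the free pool when a job finishes."""
    node.free = sorted(node.free + gpu_ids)


if __name__ == "__main__":
    cluster = [Node("gpu-node-1", 8), Node("gpu-node-2", 8)]
    for request in (6, 4, 8, 2):
        print(request, allocate(cluster, request))
```

In this run, the 8-GPU request returns `None`: no single node still has eight free GPUs, so the job waits rather than sharing devices. Production schedulers such as Slurm add priorities, backfill scheduling and multi-node placement on top of this basic bookkeeping.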

The orchestration layer simplifies deployment by providing a pre-optimised stack with drivers, operators, and frameworks already installed. This reduces configuration overhead and speeds up deployment times, enabling developers and researchers to focus on innovation rather than infrastructure management.

Best practices for configuring Slurm for high-performance GPU workloads

To achieve peak efficiency in AI training and inferencing, Slurm GPU configurations must be carefully optimised. Tata Communications’ cloud platform has been engineered to eliminate bottlenecks and maximise GPU performance.

Here are some key configuration practices followed in the Slurm Kubernetes environment:

- Accelerated GPU synchronisation: A non-blocking InfiniBand network speeds up GPU synchronisation during large training jobs, so multi-GPU workloads scale smoothly.

- High-speed parallel storage: A dedicated High-Speed Parallel File System based on Lustre supports intensive data transfer between storage and GPUs. With read speeds of 105 GB/s, write speeds of 75 GB/s, and 3 million IOPS, it lets AI models access data rapidly, reducing training times.

- Optimised GPU selection: Tata Communications supports advanced accelerators such as the NVIDIA L40S, which offers ray-tracing capabilities and high throughput. These are well suited to complex tasks such as multi-modal inferencing that combines text, images, and video.
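The standard cost model for a ring all-reduce shows why synchronisation bandwidth matters. Ring all-reduce is one of the collective algorithms that libraries such as NCCL use to average gradients across GPUs. In it, each of N GPUs sends and receives roughly 2(N-1)/N times the gradient size per step, so link bandwidth bounds the time. The article does not say which collective algorithm the platform uses. The model size and link speed below are hypothetical, and the sketch ignores latency and overlap with compute.

```python
def ring_allreduce_bytes_per_gpu(gradient_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends (and receives) in one ring all-reduce."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return 2 * (num_gpus - 1) / num_gpus * gradient_bytes


def ring_allreduce_seconds(gradient_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate: ignores latency and overlap with compute."""
    return ring_allreduce_bytes_per_gpu(gradient_bytes, num_gpus) / link_bytes_per_s


if __name__ == "__main__":
    grads = 7e9 * 2       # hypothetical 7B-parameter model with 2-byte (FP16) gradients
    link = 400e9 / 8      # hypothetical 400 Gb/s link, converted to bytes per second
    for n in (2, 8, 16, 64):
        print(n, ring_allreduce_seconds(grads, n, link))
```

Under these assumptions, the estimate grows only from about 0.28 s at 2 GPUs to about 0.55 s at 64 GPUs. Per-GPU traffic approaches twice the gradient size, so the fabric's bandwidth, not the GPU count, sets the cost of each synchronisation step.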

These optimisations ensure that Slurm GPU workloads deliver consistent performance even under heavy computational demand, making them suitable for research institutions, financial modelling, or large-scale AI product development.
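The storage figures quoted above translate into a rough per-epoch estimate. The sketch below takes this article's 105 GB/s read throughput and 3 million IOPS as defaults and returns whichever limit dominates. It assumes the job can use the full aggregate figures and that each file costs one I/O operation, and it ignores caching. The dataset sizes in the example are hypothetical.

```python
def epoch_read_seconds(dataset_bytes: float, num_files: int,
                       read_bytes_per_s: float = 105e9,
                       iops: float = 3e6) -> float:
    """Lower bound on the time to read a dataset once from parallel storage."""
    throughput_bound = dataset_bytes / read_bytes_per_s
    iops_bound = num_files / iops  # simplification: one I/O operation per file
    return max(throughput_bound, iops_bound)


if __name__ == "__main__":
    ten_tb = 10e12
    # 10 TB as one million 10 MB files: limited by read throughput (about 95 s).
    print(epoch_read_seconds(ten_tb, num_files=1_000_000))
    # The same 10 TB as one billion 10 KB files: limited by IOPS (about 333 s).
    print(epoch_read_seconds(ten_tb, num_files=1_000_000_000))
```

Teams commonly pack many small samples into larger shards, and this comparison suggests why: the same bytes become throughput-bound rather than IOPS-bound.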

Real-world applications: Slurm GPU in AI and ML workloads

The power of Slurm GPU orchestration extends across multiple industries and use cases. Below are some Slurm GPU examples demonstrating how this framework supports real-world enterprise AI workloads:

- AI Research and development: Universities and research centres use Slurm-based GPU scheduling to efficiently allocate computing resources for model training and simulation.

- Manufacturing quality control: Computer vision models powered by GPUs such as the NVIDIA L40S can process and analyse thousands of images per second to detect production defects.

- Healthcare and diagnostics: Slurm-managed GPUs help train models that analyse medical imagery or genomic data with high accuracy.

- Enterprise AI and LLM training: Businesses developing large-scale language models use Slurm Kubernetes environments to manage distributed training efficiently.

These Slurm examples highlight how integrating Slurm scheduling within Tata Communications’ AI Cloud enables scalable, high-performance computing for diverse business challenges.
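In distributed LLM training, each task that `srun` launches learns its placement from environment variables such as `SLURM_PROCID`, `SLURM_NTASKS` and `SLURM_LOCALID`. Below is a minimal sketch of reading them in Python. It assumes one task per GPU, which is a common convention rather than something the article specifies.

```python
import os
from typing import Mapping, Optional


def slurm_dist_info(env: Optional[Mapping[str, str]] = None) -> dict:
    """Read the rank layout Slurm exports to every task started by srun."""
    env = os.environ if env is None else env
    return {
        "rank": int(env["SLURM_PROCID"]),         # global rank across all nodes
        "world_size": int(env["SLURM_NTASKS"]),   # total number of tasks in the step
        "local_rank": int(env["SLURM_LOCALID"]),  # task index on this node
    }


if __name__ == "__main__":
    if "SLURM_PROCID" in os.environ:
        info = slurm_dist_info()
        # Assuming one task per GPU, local_rank doubles as the GPU index on the node.
        print(f"rank {info['rank']}/{info['world_size']} -> GPU {info['local_rank']}")
    else:
        print("not running under srun")
```

A training framework can then use these values. For example, they could be passed to PyTorch's `torch.distributed.init_process_group(backend="nccl", rank=..., world_size=...)`. With its default `env://` initialisation, that call also needs `MASTER_ADDR` and `MASTER_PORT` to be set.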

Monitoring, insights, and optimisation for Slurm GPU jobs

For enterprises managing mission-critical AI workloads, visibility and control are essential. Tata Communications provides advanced observability and monitoring capabilities for Slurm GPU jobs.

Using integrated tools such as Grafana, Prometheus, and Alertmanager, organisations can monitor resource usage, performance metrics, and job statuses in real time. This proactive approach ensures that workloads are optimised continuously and potential issues are detected before they impact performance.
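The sketch below illustrates the kind of query involved. It calls the Prometheus HTTP API (`/api/v1/query`) for average GPU utilisation per host and parses the JSON response. It assumes NVIDIA's DCGM exporter is feeding Prometheus and exposing the `DCGM_FI_DEV_GPU_UTIL` metric. The `Hostname` label and the Prometheus address are assumptions that vary by deployment.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumes dcgm-exporter metrics; label names can differ between deployments.
GPU_UTIL_QUERY = "avg by (Hostname) (DCGM_FI_DEV_GPU_UTIL)"


def instant_query_url(prometheus_base: str, promql: str = GPU_UTIL_QUERY) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{prometheus_base.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_instant_vector(body: str, label: str = "Hostname") -> dict:
    """Map each series' label value to its sample value (percent for GPU util)."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error', 'unknown error')}")
    return {
        series["metric"].get(label, "unknown"): float(series["value"][1])
        for series in payload["data"]["result"]
    }


def gpu_utilisation_by_host(prometheus_base: str) -> dict:
    """Fetch and parse current GPU utilisation (requires a reachable Prometheus)."""
    with urllib.request.urlopen(instant_query_url(prometheus_base), timeout=10) as resp:
        return parse_instant_vector(resp.read().decode("utf-8"))
```

For example, `gpu_utilisation_by_host("http://prometheus.example:9090")` (a hypothetical address) would return a dictionary mapping host names to utilisation percentages. The same PromQL expression could back a Grafana panel, or a Prometheus alerting rule for idle GPUs routed through Alertmanager.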

Additionally, Infra Monitoring and Log Management tools enhance transparency and enable administrators to maintain compliance and operational efficiency. These insights help enterprises fine-tune their Slurm Kubernetes configurations for even greater performance and cost savings.

Final Thoughts on Slurm GPU

The combination of Slurm GPU scheduling and Kubernetes orchestration represents the future of high-performance AI computing. Tata Communications delivers this powerful framework through its AI Cloud infrastructure, enabling enterprises to train, deploy, and scale workloads efficiently while maintaining robust security and predictable costs.

By leveraging dedicated GPU solutions, advanced networking, and cloud-native orchestration, Tata Communications empowers organisations to achieve faster innovation and higher ROI. Whether optimising data-intensive AI pipelines or running complex inferencing models, the Slurm GPU environment ensures speed, reliability, and scalability.

Ready to transform your enterprise AI strategy? Schedule a conversation with Tata Communications today to discover how Slurm GPU scheduling and cloud-native orchestration can elevate your AI performance.

FAQs on Slurm GPU

How does Slurm GPU enhance resource utilisation for AI and ML workloads?

Can Slurm integrate seamlessly with Kubernetes for enterprise GPU management?

What are practical Slurm GPU examples for efficient machine learning model training?
